Partition Projection in the Data Lakehouse: Cheaper Pipelines, Faster Queries

Partition Projection - Rule-based

Partition Projection is a powerful technique in modern data lakehouse architecture that automates Hive-style partition management while preserving the benefits of partition pruning. By eliminating the need to manually track every partition in a catalog, partition projection speeds up query planning and reduces ETL costs. This post explains how partition projection works, why it’s valuable for cloud-agnostic data lakehouse systems, and the considerations and best practices for using partition projections.

Understanding Hive-Style Partitioning and Pruning

In a typical data lakehouse setup, data is stored in a cloud data lake (e.g., Amazon S3) and queried through engines like AWS Athena, Apache Spark, or Trino. Data is often organized using Hive-style partitioning – for example, storing files in paths like s3://bucket/table/date=2025-09-01/region=US/.... Each subfolder encodes a partition value. This structure enables partition pruning in query engines: if a SQL query has WHERE date='2025-09-01', the engine can skip files in other date partitions entirely, scanning only the relevant subset of data. Partitioning thereby improves query performance and lowers cost by reading less data.

However, traditional partitioning requires that every partition (e.g., every date or region) be registered in a catalog (like the AWS Glue Data Catalog or Hive Metastore) as metadata. The catalog stores the list of partitions and their locations. Maintaining this catalog metadata becomes challenging as partitions grow to thousands or millions. Every time new data arrives (say a new date or a new value), you must update the catalog’s partition list, or the query engine won’t see the data. This leads to significant partition management overhead.

The Pain of Manual Partition Management

Managing Hive-style partitions at scale can inflate your ETL pipelines and cloud costs. Data engineers historically used a few approaches to keep partition metadata in sync:

- Manual or Scheduled Updates: Running the

MSCK REPAIR TABLEcommand in Athena or Hive scans the S3 directory structure and adds any missing partitions to the catalog. This needs to be run regularly and can be slow and costly on large tables. - Glue Crawlers or Scripts: Setting up an AWS Glue Crawler or custom scripts (e.g., AWS Lambda triggers) to regularly crawl the storage and update the catalog. This automates partition addition but introduces extra infrastructure and lag. For example, a scheduled Glue crawler or trigger might run every hour, meaning newly arrived data isn’t queryable until after the update runs.

- Over-Provisioning Partitions: Pre-registering all possible partition values (e.g., all dates in a year) in advance. This avoids missing data, but it bloats the catalog with many empty partitions and hurts query performance over time.

These approaches have downsides: increased ETL complexity, delays between data arrival and availability, and mounting ETL costs for maintaining metadata. Additionally, query planning itself can slow down when a table has an enormous number of partitions.

In summary, classic partition management in a data lakehouse comes with maintenance overhead and scaling issues. If only there were a way to automate partition management and remove this burden… This is exactly what Partition Projection offers.

What is Partition Projection?

Partition Projection is a feature that lets you declare a partitioning scheme for a table instead of manually tracking partitions. In essence, the data engineer provides a formula or pattern for partition values, and the query engine projects (computes) the list of partitions on the fly, rather than looking them up in the catalog. This means you no longer need to run crawlers or repair commands for new partitions – the engine knows how to derive the partition locations from your specification.

When partition projection is enabled, the Glue catalog (or Hive metastore) will stop storing individual partition entries for that table. Instead, the table’s definition includes a set of table properties that define the partitioning scheme. Athena (and other engines that support this) use these properties to determine which partitions exist and where to find them. Because this is calculated in-memory using the known patterns, it avoids remote catalog calls and speeds up query planning.

How Partition Projection Works

Hive-style (list-based)

s3://lakehouse/events/year=2025/month=08/day=28/- Requires explicit partition entries in the metastore.

- Adding new partitions = repair jobs or DDL commands.

- Query planning slows as partitions increase.

Partition Projection (rule-based)

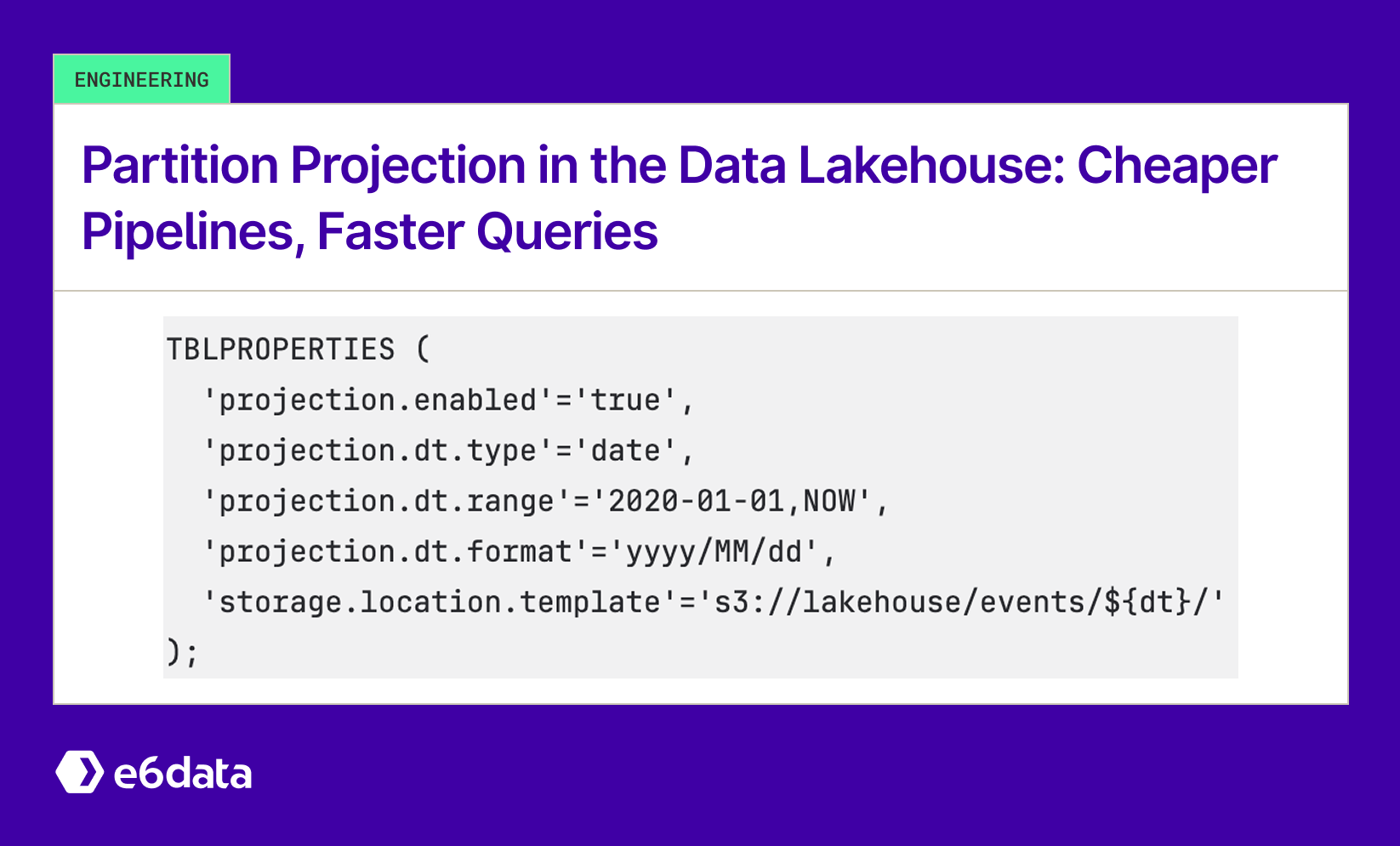

TBLPROPERTIES (

'projection.enabled'='true',

'projection.dt.type'='date',

'projection.dt.range'='2020-01-01,NOW',

'projection.dt.format'='yyyy/MM/dd',

'storage.location.template'='s3://lakehouse/events/${dt}/'

);

Now, when you query:

SELECT * FROM events WHERE dt = '2025-08-28';

The engine directly computes the path:

s3://lakehouse/events/2025/08/28/

No catalog lookups. No repair jobs. Just partition math.

Cost Savings in ETL

One often-overlooked benefit of Partition Projection is lower ETL costs.

In Hive-style partitioning, every new partition must be added to the catalog during ingestion. At scale, this means:

- Extra ETL steps – jobs spend time running

ALTER TABLE ADD PARTITIONfor every daily/hourly folder. - API charges – services like AWS Glue charge per metadata call — registering thousands of partitions per day quickly adds up.

- Longer pipelines – repair jobs can take hours for large tables, delaying data availability.

- Conversion overhead – many teams even run extra ETL steps to convert clean date-based layouts into Hive-style

col=valuepaths just to make query engines understand them. This adds unnecessary I/O and compute cost.

With Partition Projection, you skip all of this:

- Your ETL just writes data to the correct path (e.g.,

s3://lakehouse/events/2025/08/28/). - The query engine automatically understands it based on rules.

- No partition registration, no repair jobs, no format conversion.

This makes ETL pipelines cheaper, faster, and simpler — while still keeping query performance intact.

Hive-Style Partitioning

-----------------------

ETL job --> Write data --> Register partitions in catalog --> Query reads

Partition Projection

--------------------

ETL job --> Write data (no registration, no conversion) --> Query applies rules --> Reads directly

(List-based vs rule-based flow for ingestion + querying)

Benefits of Partition Projection

Partition projection brings several benefits for data engineers and the data lakehouse environment:

- Automated partition management – no more manual

MSCK REPAIRor Glue crawlers. - Faster query planning – avoids catalog lookups, enabling quicker partition pruning.

- Lower ETL costs – skips extra jobs for partition registration or conversion.

- Reduced metadata overhead – prevents catalog bloat from millions of partitions.

- Cloud-agnostic – works across S3, ADLS, GCS, and engines like e6data, Athena, or Trino.

Considerations and Best Practices

While partition projection is extremely useful, keep in mind a few considerations:

- Injected partitions – Queries must always include a filter on injected partition columns; otherwise, they will execute, but with bad performance.

- Catalog behavior – With

projection.enabled=true, engines like e6data ignore manually added partitions. Ensure your projection rules cover all data ranges. - Projection ranges – Avoid overly broad ranges or massive enums (can create millions of theoretical partitions and inflate metadata). Use realistic intervals like daily instead of per-second.

- Data presence – Engines assume projected partitions exist. Missing files return empty results; monitor ETL quality.

Conclusion

Partition Projection is a practical optimization for the data lakehouse, replacing manual Hive-style catalog updates with rule-based partitioning. It automates partition management by removing the need for MSCK REPAIR or Glue crawlers, speeds up query performance through efficient partition pruning, and lowers ETL costs by eliminating partition registration and conversion steps. Because it is cloud-agnostic and scales seamlessly to billions of partitions, partition projection reduces metadata overhead while ensuring faster queries and simpler pipelines. In short, it’s an essential best practice for modern, scalable data lakehouse architectures.

Frequently asked questions (FAQs)

We are universally interoperable and open-source friendly. We can integrate across any object store, table format, data catalog, governance tools, BI tools, and other data applications.

We use a usage-based pricing model based on vCPU consumption. Your billing is determined by the number of vCPUs used, ensuring you only pay for the compute power you actually consume.

We support all types of file formats, like Parquet, ORC, JSON, CSV, AVRO, and others.

e6data promises a 5 to 10 times faster querying speed across any concurrency at over 50% lower total cost of ownership across the workloads as compared to any compute engine in the market.

We support serverless and in-VPC deployment models.

We can integrate with your existing governance tool, and also have an in-house offering for data governance, access control, and security.

.svg)

%20(1).svg)

.svg)

.png)

.svg)

.svg)

.svg)

.svg)

.png)

.svg)