Procedural Power, Set-speed: Inside e6data’s Froid-inspired UDF Engine

Multi-statement UDF

Picture the full expressive power of procedural code (variables, loops, even branching logic) living right inside your SQL, but without the notorious performance tax that usually comes with it. SQL User-Defined Functions (UDFs) were invented to give analysts that superpower, yet they often backfire when every call triggers a slow, row-by-row detour away from the query optimizer. At e6data, we decided that the trade-off was unacceptable, so we modified the rules. Inspired by Microsoft’s Froid framework and the wave of research that followed, our engine automatically inlines UDFs, fusing their logic back into the set-oriented query plan where it belongs. The result? Cleaner code, richer abstraction, and orders-of-magnitude faster execution, without asking developers to rewrite a single line. This post flags off a series where we’ll simplify UDFs, expose the bottlenecks, and then dive deep into the algorithms that enable e6data to make “procedural” feel as fast as “pure SQL.”

Why do UDFs Matter?

A SQL User-Defined Function, or UDF, is exactly what its name suggests: a function that you write and register inside the database to perform custom logic. Every major relational engine has supported UDFs for years, and cloud platforms such as Azure SQL Database log tens of millions of UDF definitions and billions of daily invocations. Clearly, developers love them, but there’s more to the story.

Why do Developers Reach for UDFs?

With benefits like these, UDFs have become a staple of real-world SQL development. Seasoned DBAs, however, know the catch: performance pain. To see why, we first need to distinguish the two broad flavors of UDFs.

What are the Differences between Inline and Multi-Statement UDFs?

Despite their elegance and convenience, UDFs aren’t all built alike. The moment a developer moves from a tidy one-liner to a block with variables and loops, the database stops treating the function as part of the set-based plan and starts executing it row-by-row. That line in the sand, between inline and multi-statement UDFs, is exactly where performance fortunes diverge. Before we dive into how e6data erases that penalty, let’s clarify what distinguishes these two flavors and why the choice can make or break a query’s speed.

If your function is a simple expression, life is good. The database can slide it into the main plan and keep everything set-oriented. The moment you add loops, branches, or multiple statements, you cross into multi-statement territory. That’s where the pain starts.

Why do Traditional UDFs Slow You Down?

- Context-Switch Overhead

Every invocation bounces the engine from set-oriented execution to a procedural mini-interpreter and back. Thousands of hops = thousands of stalls. - Row-by-Row (RBAR) Execution

A multi-statement UDF inside a SELECT list often fires once per output row. A 100 K-row result becomes 100 K miniature procedures, usually single-threaded. - Optimizer Blindness

The planner can’t peek inside the function, so it can’t reorder joins, push predicates, or parallelize. Lacking estimates, it often chooses the safest and slowest plan: evaluate the UDF verbatim for every row.

In combination, these flaws can throttle queries by 10x, 100x, even 1000x compared with set-based SQL.

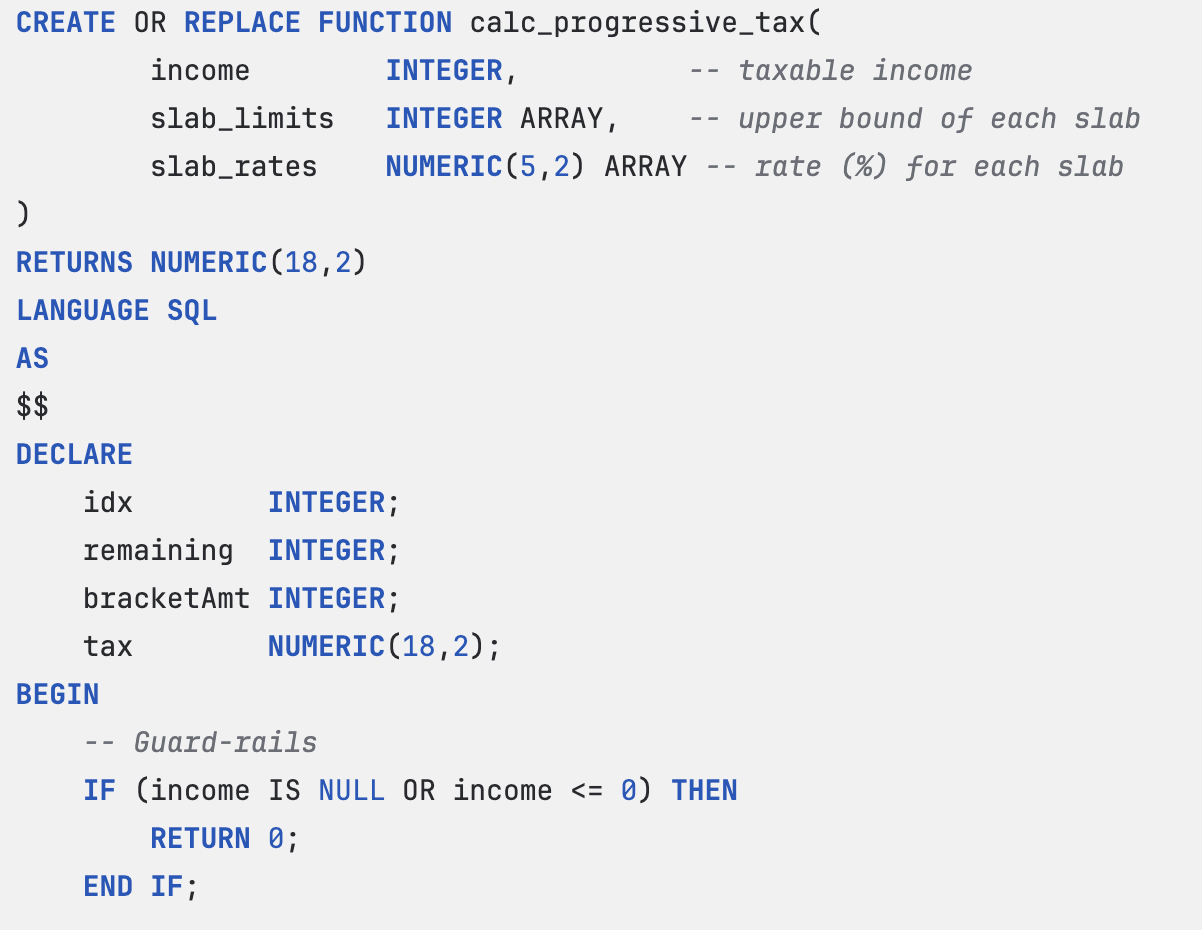

Let’s understand how UDF works by taking an example business rule wrapped in a multi-statement UDF

CREATE OR REPLACE FUNCTION calc_progressive_tax(

income INTEGER, -- taxable income

slab_limits INTEGER ARRAY, -- upper bound of each slab

slab_rates NUMERIC(5,2) ARRAY -- rate (%) for each slab

)

RETURNS NUMERIC(18,2)

LANGUAGE SQL

AS

$$

DECLARE

idx INTEGER;

remaining INTEGER;

bracketAmt INTEGER;

tax NUMERIC(18,2);

BEGIN

-- Guard‑rails

IF (income IS NULL OR income <= 0) THEN

RETURN 0;

END IF;

remaining := income;

tax := 0;

idx := 1;

-- Walk the slabs one‑by‑one

WHILE (idx <= CARDINALITY(slab_limits))

DO

BEGIN

-- all income consumed → early exit

IF (remaining <= 0) THEN

BREAK;

END IF;

-- amount taxed in this slab

bracketAmt := LEAST(remaining, slab_limits[idx]);

-- add slab’s contribution

tax := tax + bracketAmt * slab_rates[idx] / 100;

-- move to next slab

remaining := remaining - bracketAmt;

idx := idx + 1;

END;

END WHILE;

RETURN tax;

END

$$

A seemingly innocent payroll query that calls the UDF:

SELECT

e.emp_id,

e.name,

calc_progressive_tax(e.salary,

ARRAY[250000,500000,1000000],

ARRAY[5,20,30]) AS tax_due

FROM employee AS e

WHERE e.fiscal_year = 2025;

Under the hood, vanilla lakehouse engines still execute it like this, and the pain is familiar

The result? 50,000 employees = 50,000 miniature procedures plus tens of thousands of context switches. This is a textbook RBAR collapse.

As with the original loyalty‑tier example, the optimizer is blind to slab predicates, can’t push filters, and usually falls back to single‑threaded nested loops, i.e., the classic “avoid procedural UDFs in critical paths” warning.

Together, these issues can turn a neat, reusable abstraction into a 10x, 100x, or even 1000x slowdown. That’s why many DBAs warn, “Avoid UDFs in critical paths.”

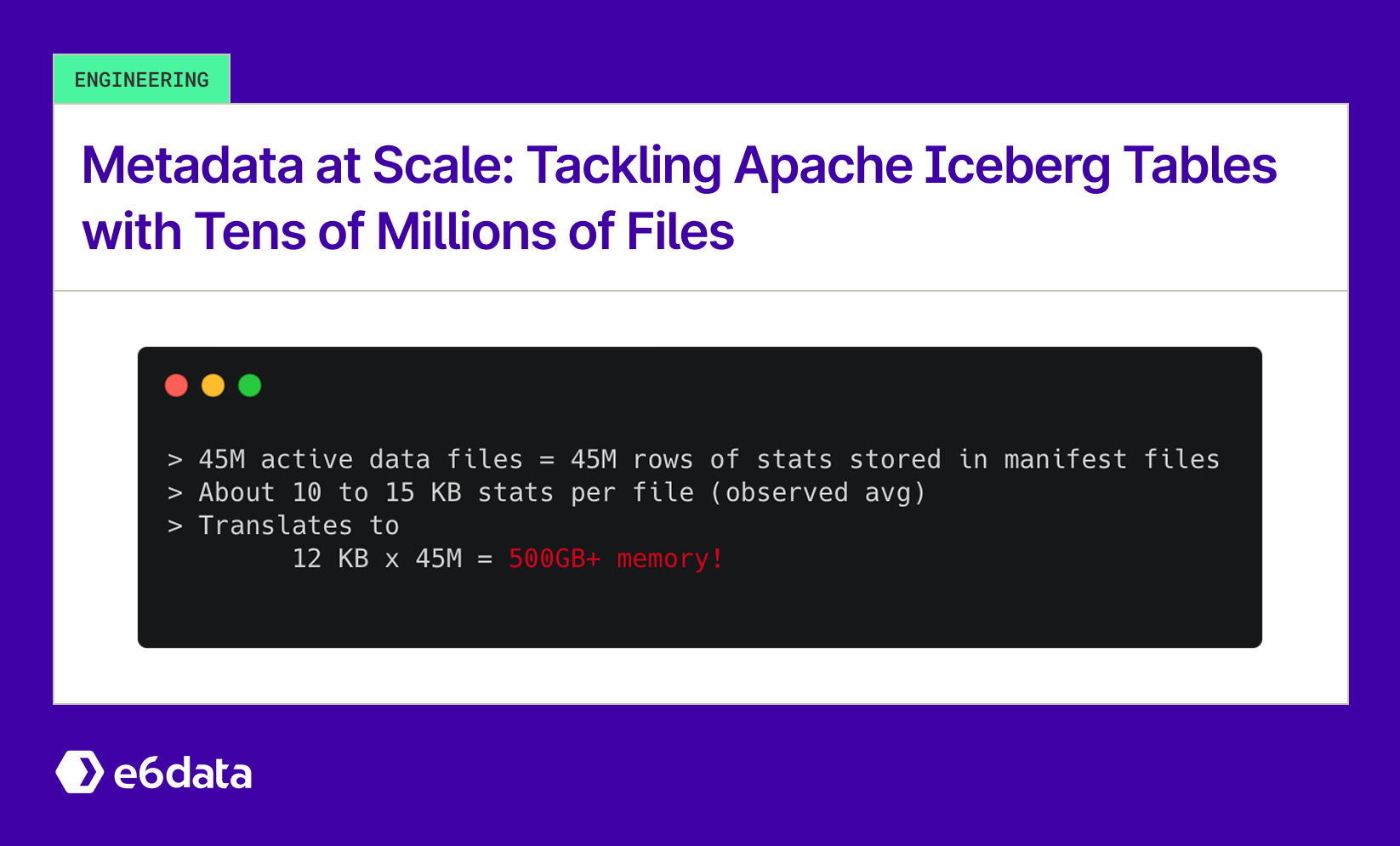

Databricks and Snowflake do expose “SQL UDFs,” but only in the most limited, macro-like form: the body must be a single expression or query (no variables, no loops, no multi-statement logic) - CREATE FUNCTION (SQL and Python) | Databricks Documentation and Scalar SQL UDFs | Snowflake Documentation. As a result, developers quickly bump into those guardrails and fall back to external Python/Java UDFs or stored procedures, forfeiting optimizer insight and lakehouse performance.

e6data takes a different route: We are among the first lakehouse engines to bring full Froid-style inlining of multi-statement SQL UDFs into the platform. Instead of forcing users to shrink their logic (or move it out of SQL), we melt the procedural wrapper into a single relational plan the optimizer can chew on. So, no rewrites, no compromises.

For years, that warning felt like the end of the conversation: either abandon multi-statement UDFs or accept the drag they impose. But in database research circles, a quiet revolution was brewing. Starting with Microsoft’s landmark Froid paper, engineers showed that the real fix isn’t to delete UDFs, but to dissolve the procedural shell and fold its logic back into the relational plan. In other words, let the optimizer see everything, then do what it does best. We embraced and extended that idea inside our e6data engine. Instead of telling users to rewrite code, we rewrite the execution model: our inliner automatically transforms the example function above into a single, set-oriented expression the optimizer can streamline and parallelize at will.

WITH RECURSIVE "run" ("state", "prev_state", "rec", "income", "slab_limits", "slab_rates", "idx", "remaining", "bracketAmt", "tax", "__RETURN_VAR__", "return_value", "iterations") AS (

SELECT 'entry', NULL, TRUE, "income", "slab_limits", "slab_rates", NULL, NULL, NULL, NULL, NULL, NULL, 0

UNION ALL

SELECT *

FROM (SELECT CASE WHEN "income" IS NULL OR "income" <= 0 THEN 'IF_MERGE_0' ELSE 'IF_TRUE_0' END, 'entry', TRUE, "income", "slab_limits", "slab_rates", "idx", "remaining", "bracketAmt", "tax", "__RETURN_VAR__", NULL, "iterations" + 1

FROM "run"

WHERE "state" = 'entry'

UNION ALL

SELECT 'RETURN_BLOCK_0', 'IF_TRUE_0', TRUE, "income", "slab_limits", "slab_rates", "idx", "remaining", "bracketAmt", "tax", "__RETURN_VAR__#3", NULL, "iterations" + 1

FROM "run",

LATERAL (SELECT 0 AS "__RETURN_VAR__#3")

WHERE "state" = 'IF_TRUE_0'

UNION ALL

SELECT 'WHILE_CONDITION_0', 'IF_MERGE_0', TRUE, "income", "slab_limits", "slab_rates", "idx#2", "remaining#2", "bracketAmt", "tax#2", "__RETURN_VAR__", NULL, "iterations" + 1

FROM "run",

LATERAL (SELECT "income" AS "remaining#2"),

LATERAL (SELECT 0 AS "tax#2"),

LATERAL (SELECT 1 AS "idx#2")

WHERE "state" = 'IF_MERGE_0'

UNION ALL

SELECT 'RETURN_BLOCK_0', 'RETURN_BLOCK_0', FALSE, "income", "slab_limits", "slab_rates", "idx", "remaining", "bracketAmt", "tax", "__RETURN_VAR__", "__RETURN_VAR__", "iterations" + 1

FROM "run"

WHERE "state" = 'RETURN_BLOCK_0'

UNION ALL

SELECT CASE WHEN "idx" <= CARDINALITY("slab_limits") THEN 'WHILE_MERGE_0' ELSE 'WHILE_BODY_0' END, 'WHILE_CONDITION_0', TRUE, "income", "slab_limits", "slab_rates", "idx", "remaining", "bracketAmt", "tax", "__RETURN_VAR__", NULL, "iterations" + 1

FROM "run"

WHERE "state" = 'WHILE_CONDITION_0'

UNION ALL

SELECT CASE WHEN "remaining" <= 0 THEN 'IF_MERGE_1' ELSE 'WHILE_MERGE_0' END, 'WHILE_BODY_0', TRUE, "income", "slab_limits", "slab_rates", "idx", "remaining", "bracketAmt", "tax", "__RETURN_VAR__", NULL, "iterations" + 1

FROM "run"

WHERE "state" = 'WHILE_BODY_0'

UNION ALL

SELECT 'RETURN_BLOCK_0', 'WHILE_MERGE_0', TRUE, "income", "slab_limits", "slab_rates", "idx", "remaining", "bracketAmt", "tax", "__RETURN_VAR__#2", NULL, "iterations" + 1

FROM "run",

LATERAL (SELECT "tax" AS "__RETURN_VAR__#2")

WHERE "state" = 'WHILE_MERGE_0'

UNION ALL

SELECT 'WHILE_CONDITION_0', 'IF_MERGE_1', TRUE, "income", "slab_limits", "slab_rates", "idx#4", "remaining#4", "bracketAmt#3", "tax#4", "__RETURN_VAR__", NULL, "iterations" + 1

FROM "run",

LATERAL (SELECT LEAST("remaining", "slab_limits"["idx"]) AS "bracketAmt#3"),

LATERAL (SELECT "tax" + "bracketAmt#3" * "slab_rates"["idx"] / 100 AS "tax#4"),

LATERAL (SELECT "remaining" - "bracketAmt#3" AS "remaining#4"),

LATERAL (SELECT "idx" + 1 AS "idx#4")

WHERE "state" = 'IF_MERGE_1') AS "transitions"

WHERE "iterations" < 100 AND "rec" = TRUE)

SELECT "return_value"

FROM "run"

WHERE "rec" = FALSE

Now the optimizer “sees” everything: parallelize the join, and eliminate every context switch. Execution time plummets, while developers keep writing clear, modular UDFs.

In our next posts, we’ll walk through how research breakthroughs like Microsoft’s Froid taught the industry to inline multi-statement UDFs (transforming them back into set-oriented plans) and how we built that capability natively into our e6data engine.

Stay tuned! The deep dive into the inlining algorithm is where the real fun begins!

Resource

Froid: Optimization of Imperative Programs in a Relational Database

Frequently asked questions (FAQs)

We are universally interoperable and open-source friendly. We can integrate across any object store, table format, data catalog, governance tools, BI tools, and other data applications.

We use a usage-based pricing model based on vCPU consumption. Your billing is determined by the number of vCPUs used, ensuring you only pay for the compute power you actually consume.

We support all types of file formats, like Parquet, ORC, JSON, CSV, AVRO, and others.

e6data promises a 5 to 10 times faster querying speed across any concurrency at over 50% lower total cost of ownership across the workloads as compared to any compute engine in the market.

We support serverless and in-VPC deployment models.

We can integrate with your existing governance tool, and also have an in-house offering for data governance, access control, and security.

.svg)

%20(1).svg)

.svg)

.png)

.svg)

.svg)

.svg)

.svg)

.png)

.svg)