Improved open-table analytics stack with Iceberg, Polaris, Hudi, Delta Lake

Product Update – Enhanced Open-Table Support

TL;DR: Connect once, query everywhere. Our latest release provides advanced support for Apache Iceberg, Apache Polaris, Apache Hudi, and Delta Lake, enabling you to run fast SQL queries across every open table format without copying data or rewriting pipelines.

Why does this matter for your lakehouse?

Open table formats have become the backbone of modern lakehouses. Iceberg delivers petabyte-scale performance and versioned metadata. Polaris adds a cloud-native catalog with enterprise-grade governance. Hudi contributes record-level upserts and incremental processing, while Delta Lake brings ACID guarantees and time travel to the data lake. Teams want the freedom to mix these technologies without the pain of managing multiple engines, drivers, and security models.

With this release, e6data’s query engine works natively with all four technologies, consolidating your existing catalogs into a single workspace.

What’s new in this release?

How it works (architecture and performance path)

- Smart Catalog Layer – e6data now understands Iceberg and Delta layout metadata natively and communicates with Polaris through the same REST interface Iceberg uses.

- Zero-copy ingestion – Data stays in your object storage (S3, GCS, or ADLS). We read only the files your query touches, which reduces scan cost and query time.

- Cross-catalog joins – Need to join a Delta customer table with an Iceberg events log? One SQL query spans both.

- Fine-grained security – We respect Polaris RBAC and Unity Catalog privileges automatically, so users see only what they’re allowed to see.
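
To make the cross-catalog join concrete, here is a minimal sketch of what such a query might look like. It assumes tables are addressed as `<catalog>.<namespace>.<table>`, in line with the `SHOW TABLES FROM <catalog>.<namespace>` form used in the quick start. The catalog, namespace, table, and column names (`delta_cat`, `iceberg_cat`, `customer_id`, and so on) are hypothetical, and the helper functions are illustrative rather than part of any e6data API.

```python
import re

# Plain identifiers only; anything else is rejected rather than quoted.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def qualified_name(catalog: str, namespace: str, table: str) -> str:
    """Return catalog.namespace.table after checking each part."""
    for part in (catalog, namespace, table):
        if not _IDENTIFIER.fullmatch(part):
            raise ValueError(f"unsupported identifier: {part!r}")
    return f"{catalog}.{namespace}.{table}"


def cross_catalog_join_sql(customers: str, events: str) -> str:
    """Build one query that joins tables living in two different catalogs."""
    return (
        "SELECT c.customer_id, COUNT(*) AS event_count\n"
        f"FROM {customers} AS c\n"
        f"JOIN {events} AS e ON e.customer_id = c.customer_id\n"
        "GROUP BY c.customer_id;"
    )


# Hypothetical names: a Delta catalog and an Iceberg catalog in one workspace.
customers = qualified_name("delta_cat", "sales", "customers")
events = qualified_name("iceberg_cat", "web", "events")
query = cross_catalog_join_sql(customers, events)
print(query)
```

The point of the sketch is that both tables appear in a single statement, so no data has to be copied between formats before the join.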

Quick start

- Create or select a workspace in the e6data console.

- Add catalog → Polaris / Iceberg / Hudi / Delta (choose one or all)

- Run SHOW TABLES FROM <catalog>.<namespace>; to confirm visibility.

- Point your BI tool (Tableau, Power BI, Looker) at the e6data endpoint and start exploring.
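
If you prefer to script the visibility check from step 3, the sketch below wraps it in a small helper. It assumes you already hold a Python DB-API 2.0 (PEP 249) cursor from whichever driver you use to reach the e6data endpoint; that connection step is not shown and nothing here is a documented e6data API. It also assumes the table name is the first column of each result row, which may differ in practice.

```python
from typing import Any, List


def list_tables(cursor: Any, catalog: str, namespace: str) -> List[str]:
    """Run SHOW TABLES for one namespace and return the table names.

    `cursor` is assumed to be a DB-API 2.0 cursor (hypothetical setup).
    """
    for part in (catalog, namespace):
        # Identifiers cannot be bound as query parameters, so accept only
        # simple identifier-like names as a conservative check.
        if not part.isidentifier():
            raise ValueError(f"unsupported identifier: {part!r}")
    cursor.execute(f"SHOW TABLES FROM {catalog}.{namespace}")
    # Assumption: the table name is the first column of each result row.
    return [row[0] for row in cursor.fetchall()]
```

With a live connection, a call such as `list_tables(conn.cursor(), "my_catalog", "my_namespace")` (both names hypothetical) should return the tables you expect to see; an empty list suggests the catalog was added but the namespace or privileges need checking.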

Reference links

- Apache Polaris documentation

- Apache Iceberg documentation

- Apache Hudi documentation

- Delta Lake documentation

Roadmap: write support and deeper governance

- Write support for Iceberg and Delta, including MERGE, UPDATE, and DELETE, is on the roadmap.

- Table-level RBAC in Polaris and automatic metadata sync across engines are in active development.

Frequently asked questions (FAQs)

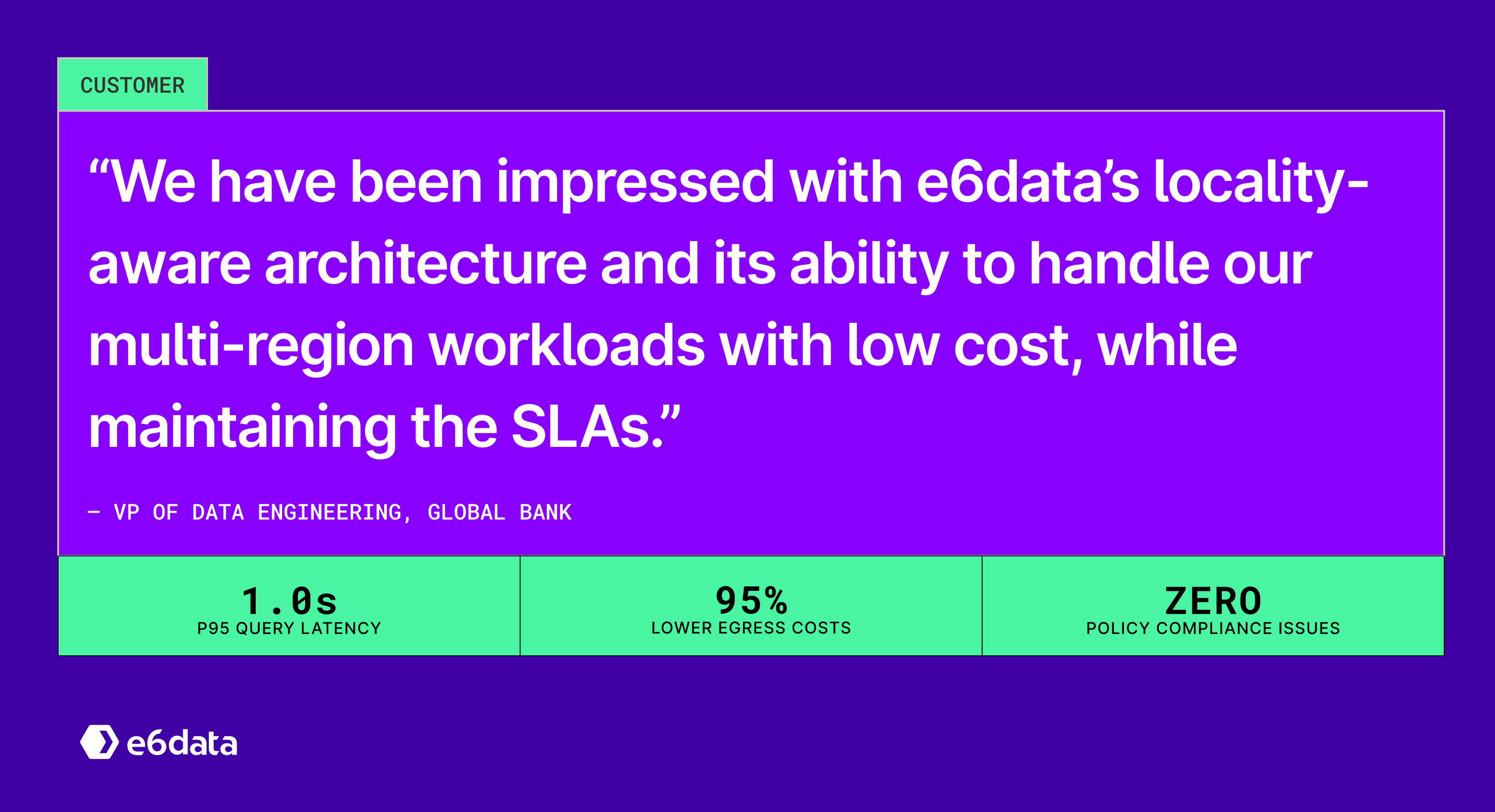

We are universally interoperable and open-source friendly. We integrate with any object store, table format, data catalog, governance tool, BI tool, and other data applications.

Our pricing is usage-based and metered on vCPU consumption, so you pay only for the compute you actually use.

We support a wide range of file formats, including Parquet, ORC, JSON, CSV, and Avro.

e6data promises 5 to 10 times faster queries at any concurrency level, with over 50% lower total cost of ownership across workloads, compared with other compute engines on the market.

We support serverless and in-VPC deployment models.

We can integrate with your existing governance tool, and also have an in-house offering for data governance, access control, and security.
