BigQuery's serverless architecture offers incredible scale, but without proper optimization, teams often face slot shortages during peak hours, runaway query costs, and frustrated business users abandoning self-service analytics. The key lies in understanding BigQuery's unique execution model: how slots work, when to use clustering vs partitioning, and how to structure queries that take advantage of BigQuery's distributed processing power. This playbook provides battle-tested optimization strategies specifically designed for enterprise and mid-market teams managing complex analytical workloads at scale.

When your executive dashboards consistently filter by the same dimensions - region, product_category, or date ranges - BigQuery's clustering delivers sub-second query performance by physically co-locating related data. You'll see the most dramatic improvements on tables larger than 1GB where dashboard queries regularly filter on the clustered columns.

Here's what happens when you implement clustering on a sales dashboard that filters by region and date:

1-- Create clustered table for dashboard performance

2CREATE OR REPLACE TABLE `project.dataset.sales_clustered`

3PARTITION BY sale_date

4CLUSTER BY region, product_category

5AS SELECT

6 region,

7 sale_date,

8 revenue,

9 order_id,

10 customer_id,

11 product_category

12FROM `project.dataset.sales_raw`;

13

14-- Dashboard query leverages clustering

15SELECT

16 region,

17 SUM(revenue) as total_revenue,

18 COUNT(order_id) as order_count

19FROM `project.dataset.sales_clustered`

20WHERE region IN ('US-WEST', 'US-EAST')

21 AND sale_date BETWEEN '2024-01-01' AND '2024-12-31'

22GROUP BY region;This clustering strategy reduces data scanning from terabytes to gigabytes because BigQuery only reads the relevant clustered blocks.

Alternatives: Partitioning by date works well for time-series dashboards but lacks the multi-dimensional optimization that clustering provides. Materialized views offer even faster performance for highly repetitive dashboard queries, though they add storage costs and complexity. For workloads requiring sub-second latency across multiple filter dimensions, e6data's decentralized architecture eliminates coordinator bottlenecks entirely, delivering consistent performance regardless of concurrent dashboard users at 1000+ QPS.

Dashboard queries that repeatedly aggregate the same metrics - monthly revenue, top products, user engagement scores - benefit enormously from materialized views. When you pre-compute these aggregations, your dashboard queries execute in milliseconds instead of seconds, and BigQuery automatically maintains the views as underlying data changes.

The magic happens when multiple dashboards query the same business metrics but with different filters:

1-- Create materialized view for common dashboard metrics

2CREATE MATERIALIZED VIEW `project.dataset.daily_sales_summary`

3PARTITION BY sale_date

4CLUSTER BY region, product_category

5AS SELECT

6 DATE(order_timestamp) as sale_date,

7 region,

8 product_category,

9 SUM(revenue) as daily_revenue,

10 COUNT(DISTINCT customer_id) as unique_customers,

11 COUNT(order_id) as order_count,

12 AVG(order_value) as avg_order_value

13FROM `project.dataset.orders`

14GROUP BY 1, 2, 3;

15

16-- Dashboard queries now execute in milliseconds

17SELECT

18 region,

19 SUM(daily_revenue) as total_revenue,

20 SUM(unique_customers) as total_customers

21FROM `project.dataset.daily_sales_summary`

22WHERE sale_date BETWEEN '2024-09-01' AND '2024-09-30'

23 AND region = 'US-WEST'

24GROUP BY region;BigQuery automatically refreshes materialized views, so your dashboards always show current data without manual intervention.

Alternatives: Scheduled queries with destination tables provide similar performance benefits but require manual refresh logic. BI Engine offers sub-second dashboard performance through in-memory caching, though it's limited to specific data sizes and query patterns. For teams needing guaranteed sub-second latency with complex dashboard hierarchies, e6data's stateless architecture scales to 1000+ concurrent users without the memory limitations of traditional BI acceleration layers.

When your data science team builds new dashboard prototypes, they don't need to query entire production datasets to validate visualizations and logic. BigQuery's table sampling lets you work with statistically representative data subsets, dramatically reducing development costs and iteration time while maintaining query pattern accuracy.

Here's how you implement intelligent sampling for dashboard development workflows:

1-- Sample 1% of data for dashboard prototyping

2SELECT

3 product_category,

4 customer_segment,

5 AVG(revenue) as avg_revenue,

6 COUNT(*) as transaction_count

7FROM `project.dataset.sales_data` TABLESAMPLE SYSTEM (1 PERCENT)

8WHERE transaction_date >= '2024-01-01'

9GROUP BY 1, 2

10ORDER BY avg_revenue DESC;Alternatives: Creating dedicated development datasets with subset data provides predictable performance but requires manual data pipeline maintenance. Query result caching helps with repeated development queries but doesn't address the fundamental cost issue.

Executive dashboards displaying user counts, unique visitors, or distinct product views don't always need exact precision - especially when approximate results load in 2 seconds versus exact counts taking 30 seconds. BigQuery's approximation functions like APPROX_COUNT_DISTINCT deliver 99%+ accuracy while scanning dramatically less data.

Here's how approximation transforms dashboard performance for high-cardinality metrics:

1-- Approximate dashboard metrics for real-time performance

2SELECT

3 DATE(event_timestamp) as event_date,

4 event_type,

5 APPROX_COUNT_DISTINCT(user_id) as unique_users,

6 APPROX_QUANTILES(session_duration, 100)[OFFSET(50)] as median_session,

7 APPROX_QUANTILES(session_duration, 100)[OFFSET(95)] as p95_session,

8 COUNT(*) as total_events

9FROM `project.dataset.user_events`

10WHERE event_timestamp >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 7 DAY)

11GROUP BY 1, 2

12ORDER BY 1 DESC;The key here is knowing which metrics require exact precision (revenue, transaction counts) versus which benefit from fast approximation (unique users, percentiles).

Alternatives: HyperLogLog sketches provide more control over approximation accuracy but require additional complexity. Streaming analytics can pre-compute real-time aggregations but adds infrastructure overhead. e6data's sub-second query execution often eliminates the need for approximation altogether, delivering exact results with the performance characteristics of approximate queries.

Complex dashboard queries that join 5-8 tables to build executive KPI views often exceed acceptable latency thresholds, especially during peak business hours when slot contention is high. Strategic denormalization pre-joins frequently accessed dimensions, transforming multi-table dashboard queries into single-table scans that execute in seconds rather than minutes.

The transformation looks like this for a typical sales performance dashboard:

1-- Create denormalized table for dashboard performance

2CREATE OR REPLACE TABLE `project.dataset.sales_dashboard_optimized`

3CLUSTER BY region, product_category, customer_segment

4AS SELECT

5 o.order_id,

6 o.order_date,

7 o.revenue,

8 c.customer_segment,

9 c.region,

10 p.product_category,

11 p.product_name,

12 s.salesperson_name,

13 s.sales_territory

14FROM `project.dataset.orders` o

15JOIN `project.dataset.customers` c ON o.customer_id = c.customer_id

16JOIN `project.dataset.products` p ON o.product_id = p.product_id

17JOIN `project.dataset.salespeople` s ON o.salesperson_id = s.salesperson_id;Once you've set this up, your dashboard queries avoid expensive joins and execute consistently fast regardless of concurrent user load. The trade-off is increased storage costs, but you'll find significant query performance improvements for mission-critical dashboards.

Alternatives: Nested and repeated fields maintain relationships without full denormalization but require query logic changes. Views with optimized joins provide abstraction without storage duplication but don't eliminate join costs.

The most expensive way to query data is using SELECT *. When you use SELECT *, BigQuery performs a full scan of every column in the table, leading to unnecessary I/O and materialization costs. Projection optimization is your first line of defense against runaway query costs.

Here's how proper column selection transforms analytical query performance:

1-- Inefficient: scans all columns

2SELECT * FROM `project.dataset.sales`

3WHERE order_date >= '2024-01-01'

4LIMIT 100; -- LIMIT doesn't reduce data scanned!

5

6-- Optimized: scan only required columns

7SELECT

8 order_id,

9 customer_id,

10 revenue,

11 order_date,

12 product_category

13FROM `project.dataset.sales`

14WHERE order_date >= '2024-01-01'

15ORDER BY revenue DESC

16LIMIT 100;

17

18-- Use SELECT * EXCEPT to exclude unnecessary columns

19SELECT * EXCEPT(internal_notes, raw_metadata, debug_info)

20FROM `project.dataset.sales`

21WHERE order_date >= '2024-01-01';Alternatives: Table previews let you explore data structure without full scans. Views can encapsulate column selection logic for repeated use patterns.

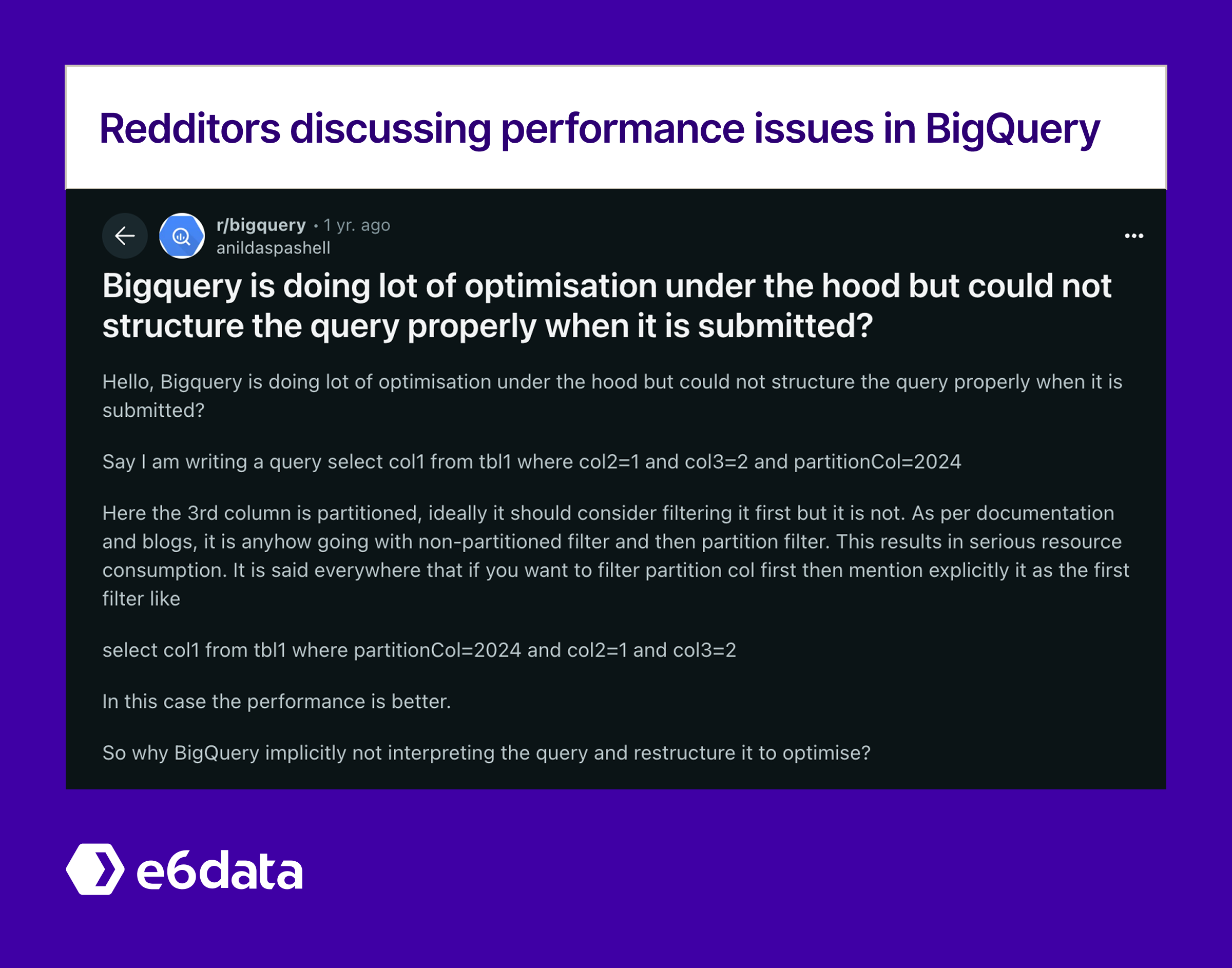

Ad-hoc analytics queries often start broad and iteratively narrow down to specific insights. The difference between scanning 10TB versus 100GB comes down to WHERE clause placement and partition pruning effectiveness. When you structure your exploratory queries to leverage BigQuery's partition and cluster pruning early, you'll dramatically reduce both query costs and execution time.

Here's how strategic WHERE clause optimization transforms exploratory analytics:

1-- Optimize for partition and cluster pruning

2SELECT

3 customer_segment,

4 product_category,

5 SUM(revenue) as segment_revenue,

6 COUNT(DISTINCT customer_id) as unique_customers,

7 AVG(order_value) as avg_order_value

8FROM `project.dataset.sales_partitioned`

9WHERE

10 -- Partition pruning first (most selective)

11 transaction_date BETWEEN '2024-09-01' AND '2024-09-30'

12 -- Cluster pruning second

13 AND region IN ('US-WEST', 'US-EAST')

14 AND product_category IN ('Electronics', 'Software')

15 -- Business logic filters last

16 AND order_value > 100

17 AND customer_segment != 'test'

18GROUP BY 1, 2

19HAVING segment_revenue > 10000

20ORDER BY segment_revenue DESC;

21

22-- Use subqueries for complex filtering logic

23WITH high_value_customers AS (

24 SELECT customer_id

25 FROM `project.dataset.customer_analytics`

26 WHERE lifetime_value > 5000

27 AND last_purchase_date >= '2024-06-01'

28)

29SELECT

30 p.product_name,

31 SUM(s.revenue) as revenue_from_hvcs,

32 COUNT(*) as hvc_purchases

33FROM `project.dataset.sales_partitioned` s

34JOIN high_value_customers h ON s.customer_id = h.customer_id

35JOIN `project.dataset.products` p ON s.product_id = p.product_id

36WHERE s.transaction_date >= '2024-09-01'

37GROUP BY 1

38ORDER BY 2 DESC;Alternatives: Query result caching helps with repeated exploratory patterns but doesn't address initial query costs. Table previews with LIMIT can validate query logic cheaply but may not represent full dataset patterns. For teams requiring unlimited data exploration without cost anxiety, e6data's predictable per-vCPU (cores consumed) pricing eliminates the guesswork in analytical query budgeting.

Multi-table analytical queries often involve joining fact tables with multiple dimension tables, and BigQuery's join performance depends heavily on table ordering and join strategies. When you understand BigQuery's distributed join algorithms, you can structure your analytical queries to minimize data shuffling and reduce execution time from minutes to seconds.

The transformation happens when you apply join optimization principles to typical analytical workloads:

1-- Optimize join order for analytical performance

2WITH filtered_sales AS (

3 SELECT

4 order_id,

5 customer_id,

6 product_id,

7 revenue,

8 order_date

9 FROM `project.dataset.sales`

10 WHERE order_date BETWEEN '2024-09-01' AND '2024-09-30'

11 AND revenue > 50 -- Pre-filter to reduce join volume

12),

13product_metrics AS (

14 SELECT

15 product_id,

16 product_category,

17 product_name,

18 cost_basis

19 FROM `project.dataset.products`

20 WHERE is_active = true

21)

22SELECT

23 p.product_category,

24 c.customer_segment,

25 c.region,

26 SUM(s.revenue) as total_revenue,

27 SUM(s.revenue - p.cost_basis) as gross_profit,

28 COUNT(s.order_id) as order_count

29FROM filtered_sales s

30JOIN product_metrics p ON s.product_id = p.product_id -- Join smaller filtered tables first

31JOIN `project.dataset.customers` c ON s.customer_id = c.customer_id

32GROUP BY 1, 2, 3

33HAVING total_revenue > 1000

34ORDER BY total_revenue DESC;

35

36-- Use ARRAY_AGG for one-to-many relationships

37SELECT

38 c.customer_id,

39 c.customer_name,

40 c.customer_segment,

41 ARRAY_AGG(STRUCT(

42 o.order_date,

43 o.revenue,

44 o.product_category

45 ) ORDER BY o.order_date DESC LIMIT 10) as recent_orders,

46 SUM(o.revenue) as total_customer_value

47FROM `project.dataset.customers` c

48JOIN `project.dataset.sales` o ON c.customer_id = o.customer_id

49WHERE o.order_date >= '2024-01-01'

50GROUP BY 1, 2, 3

51HAVING total_customer_value > 5000;Alternatives: Partitioned joins can improve performance when join keys align with partition columns. Broadcasting smaller tables happens automatically but can be optimized through table sizing.

Advanced analytical queries - cohort analysis, time-series comparisons, running totals, rank calculations - rely heavily on window functions. The challenge in BigQuery is that poorly written window functions can consume massive compute resources, while optimized implementations deliver sophisticated analytics efficiently. Understanding partitioning and ordering strategies transforms expensive analytical queries into performant insights.

Here's how to structure window functions for optimal analytical performance:

1-- Efficient cohort analysis with optimized window functions

2WITH customer_cohorts AS (

3 SELECT

4 customer_id,

5 DATE_TRUNC(MIN(order_date), MONTH) as cohort_month,

6 DATE_TRUNC(order_date, MONTH) as order_month,

7 SUM(revenue) as monthly_revenue

8 FROM `project.dataset.sales`

9 WHERE order_date >= '2024-01-01'

10 GROUP BY 1, 3

11),

12cohort_sizes AS (

13 SELECT

14 cohort_month,

15 COUNT(DISTINCT customer_id) as total_cohort_size

16 FROM customer_cohorts

17 WHERE cohort_month = order_month

18 GROUP BY 1

19),

20cohort_analysis AS (

21 SELECT

22 cc.cohort_month,

23 cc.order_month,

24 DATE_DIFF(cc.order_month, cc.cohort_month, MONTH) as month_number,

25 COUNT(DISTINCT cc.customer_id) as active_customers,

26 SUM(cc.monthly_revenue) as cohort_revenue,

27 cs.total_cohort_size as cohort_size,

28 SUM(cc.monthly_revenue)

29 OVER (PARTITION BY cc.cohort_month ORDER BY cc.order_month

30 ROWS UNBOUNDED PRECEDING) as cumulative_revenue

31 FROM customer_cohorts cc

32 JOIN cohort_sizes cs ON cc.cohort_month = cs.cohort_month

33 GROUP BY 1, 2, 4, 6

34)

35SELECT

36 cohort_month,

37 month_number,

38 active_customers,

39 cohort_size,

40 ROUND(active_customers / cohort_size * 100, 2) as retention_rate,

41 cumulative_revenue,

42 cohort_revenue

43FROM cohort_analysis

44WHERE month_number <= 12

45ORDER BY cohort_month, month_number;

46

47-- Time-series analysis with lag comparisons

48SELECT

49 DATE_TRUNC(order_date, WEEK) as week_start,

50 SUM(revenue) as weekly_revenue,

51 LAG(SUM(revenue), 1) OVER (ORDER BY DATE_TRUNC(order_date, WEEK)) as prev_week_revenue,

52 LAG(SUM(revenue), 52) OVER (ORDER BY DATE_TRUNC(order_date, WEEK)) as yoy_revenue,

53 ROUND(

54 (SUM(revenue) - LAG(SUM(revenue), 1) OVER (ORDER BY DATE_TRUNC(order_date, WEEK))) /

55 LAG(SUM(revenue), 1) OVER (ORDER BY DATE_TRUNC(order_date, WEEK)) * 100, 2

56 ) as wow_growth_pct

57FROM `project.dataset.sales`

58WHERE order_date >= '2023-01-01'

59GROUP BY 1

60ORDER BY 1;When you align window partitions with your analytical dimensions (customer cohorts, product categories, time periods), BigQuery can process these functions much more efficiently.

Alternatives: Array aggregation functions can handle some analytical patterns without window functions. Self-joins provide similar functionality but typically perform worse than optimized window functions. For teams requiring real-time analytical computations with complex window operations, e6data delivers sub-second performance for even the most complex, high-cardinality analytical queries.

Exploratory data analysis on billion-row datasets doesn't always require exact precision - especially during the discovery phase when you're identifying patterns, outliers, or data quality issues. BigQuery's approximation functions enable rapid data exploration by trading minimal accuracy for dramatic performance improvements, letting you iterate through analytical hypotheses quickly.

Here's how approximation functions accelerate large-scale data exploration:

1-- Fast market segment analysis with approximation

2SELECT

3 region,

4 customer_segment,

5 APPROX_COUNT_DISTINCT(customer_id) as approx_customers,

6 APPROX_QUANTILES(order_value, 100)[OFFSET(50)] as median_order_value,

7 APPROX_QUANTILES(order_value, 100)[OFFSET(95)] as p95_order_value,

8 SUM(revenue) as exact_revenue

9FROM `project.dataset.sales`

10WHERE order_date >= '2024-01-01'

11GROUP BY 1, 2

12ORDER BY exact_revenue DESC;BigQuery performance suffers when queries follow common anti-patterns that create unnecessary computational overhead. Understanding and avoiding these patterns is crucial for maintaining analytical query performance at scale.

Here are the key anti-patterns to avoid:

1-- AVOID: Self-joins (use window functions instead)

2-- Anti-pattern

3SELECT

4 a.customer_id,

5 a.order_date,

6 a.revenue,

7 b.prev_order_revenue

8FROM `project.dataset.sales` a

9JOIN `project.dataset.sales` b

10 ON a.customer_id = b.customer_id

11 AND a.order_date > b.order_date;

12

13-- Better: Use window functions

14SELECT

15 customer_id,

16 order_date,

17 revenue,

18 LAG(revenue) OVER (

19 PARTITION BY customer_id

20 ORDER BY order_date

21 ) as prev_order_revenue

22FROM `project.dataset.sales`;

23

24-- AVOID: Cross joins (Cartesian products)

25-- Use pre-aggregation or filtering to reduce cross join output

26WITH top_products AS (

27 SELECT product_id, product_name

28 FROM `project.dataset.products`

29 WHERE category = 'Electronics'

30 LIMIT 10

31),

32top_customers AS (

33 SELECT customer_id, customer_name

34 FROM `project.dataset.customers`

35 WHERE segment = 'Enterprise'

36 LIMIT 50

37)

38SELECT p.product_name, c.customer_name

39FROM top_products p

40CROSS JOIN top_customers c;Additionally, avoid DML statements that update single rows - BigQuery is optimized for batch operations, not OLTP workloads.

Alternatives: Use batch DML operations for updates and inserts. Consider streaming inserts for real-time requirements.

When sorting very large result sets, BigQuery can encounter resource exhaustion because final sorting occurs on a single slot. The combination of ORDER BY with very large datasets often results in "Resources exceeded" errors.

Here's how to handle large-scale sorting efficiently:

1-- AVOID: Sorting massive result sets without LIMIT

2SELECT customer_id, order_date, revenue

3FROM `project.dataset.sales`

4ORDER BY revenue DESC; -- Can overwhelm single slot

5

6-- Better: Use LIMIT with ORDER BY

7SELECT customer_id, order_date, revenue

8FROM `project.dataset.sales`

9ORDER BY revenue DESC

10LIMIT 1000;

11

12-- For pagination: Use OFFSET with LIMIT

13SELECT customer_id, order_date, revenue

14FROM `project.dataset.sales`

15ORDER BY revenue DESC

16LIMIT 1000 OFFSET 5000;

17

18-- For large analytical sorting: Filter first, then sort

19SELECT customer_id, order_date, revenue

20FROM `project.dataset.sales`

21WHERE order_date >= '2024-01-01'

22 AND revenue > 1000

23ORDER BY revenue DESC;The key insight is to reduce the dataset size before sorting, rather than attempting to sort the entire table and then limiting results.

Alternatives: Window functions with RANK() can identify top records without full sorting. Materialized views can pre-sort frequently accessed data.

Large-scale ETL operations in BigQuery require careful attention to data organization strategy. When your daily ETL jobs process hundreds of gigabytes or terabytes, the difference between well-partitioned tables and heap tables can mean the difference between 15-minute loads and 3-hour operations. Strategic partitioning combined with clustering transforms both write performance and downstream query efficiency.

Here's how to structure high-volume ETL operations for optimal performance:

1-- Create optimally partitioned destination table for ETL

2CREATE OR REPLACE TABLE `project.dataset.sales_optimized`

3(

4 transaction_id STRING,

5 customer_id STRING,

6 product_id STRING,

7 transaction_timestamp TIMESTAMP,

8 revenue NUMERIC,

9 region STRING,

10 product_category STRING,

11 customer_segment STRING

12)

13PARTITION BY DATE(transaction_timestamp)

14CLUSTER BY region, product_category, customer_segment

15OPTIONS (

16 partition_expiration_days = 1095, -- 3 years

17 description = "Sales data optimized for analytical workloads"

18);

19

20-- Efficient batch insert with partition alignment

21INSERT INTO `project.dataset.sales_optimized`

22SELECT

23 transaction_id,

24 customer_id,

25 product_id,

26 transaction_timestamp,

27 CAST(revenue as NUMERIC) as revenue,

28 UPPER(TRIM(region)) as region,

29 COALESCE(product_category, 'Unknown') as product_category,

30 CASE

31 WHEN customer_ltv > 5000 THEN 'Enterprise'

32 WHEN customer_ltv > 1000 THEN 'Commercial'

33 ELSE 'SMB'

34 END as customer_segment

35FROM `project.staging.daily_sales_raw`

36WHERE DATE(transaction_timestamp) = CURRENT_DATE - 1 -- Process yesterday's data

37 AND transaction_id IS NOT NULL;

38

39-- Bulk partition maintenance for ETL operations

40DELETE FROM `project.dataset.sales_optimized`

41WHERE DATE(transaction_timestamp) = CURRENT_DATE - 1; -- Clean before reload

42

43-- Efficient MERGE operation for incremental loads

44MERGE `project.dataset.sales_optimized` target

45USING (

46 SELECT

47 transaction_id,

48 customer_id,

49 product_id,

50 transaction_timestamp,

51 revenue,

52 region,

53 product_category,

54 customer_segment

55 FROM `project.staging.daily_sales_incremental`

56 WHERE DATE(transaction_timestamp) = CURRENT_DATE - 1

57) source

58ON target.transaction_id = source.transaction_id

59WHEN MATCHED THEN UPDATE SET

60 revenue = source.revenue,

61 region = source.region,

62 product_category = source.product_category,

63 customer_segment = source.customer_segment

64WHEN NOT MATCHED THEN INSERT (

65 transaction_id, customer_id, product_id, transaction_timestamp,

66 revenue, region, product_category, customer_segment

67) VALUES (

68 source.transaction_id, source.customer_id, source.product_id,

69 source.transaction_timestamp, source.revenue, source.region,

70 source.product_category, source.customer_segment

71);Alternatives: Time-unit column partitioning provides finer granularity than daily partitioning for high-frequency data. Ingestion-time partitioning simplifies ETL logic but may not align with analytical access patterns.

Real-time data pipelines feeding BigQuery require careful attention to streaming buffer management and insertion patterns. The challenge lies in balancing data freshness requirements with cost efficiency - streaming inserts cost more than batch loads, but poorly optimized streaming can result in buffer overflow, duplicate detection issues, and query performance degradation on recently streamed data.

Here's how to structure streaming operations for optimal performance and cost control:

1-- Optimize streaming table structure for real-time ingestion

2CREATE OR REPLACE TABLE `project.dataset.events_streaming`

3(

4 event_id STRING,

5 user_id STRING,

6 event_type STRING,

7 event_timestamp TIMESTAMP,

8 session_id STRING,

9 _insert_timestamp TIMESTAMP DEFAULT CURRENT_TIMESTAMP()

10)

11PARTITION BY DATE(event_timestamp)

12CLUSTER BY event_type, user_id;

13

14-- Query pattern optimized for streaming buffer considerations

15SELECT

16 DATE(event_timestamp) as event_date,

17 event_type,

18 COUNT(DISTINCT user_id) as unique_users,

19 COUNT(*) as total_events

20FROM `project.dataset.events_streaming`

21WHERE event_timestamp >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 HOUR)

22GROUP BY 1, 2

23ORDER BY 1, 2;What makes this particularly effective is the separation of hot streaming data from cold analytical data, combined with proper buffer management. You'll find that this approach reduces streaming costs while maintaining query performance on both real-time and historical data.

Alternatives: Dataflow streaming can handle complex streaming transformations before BigQuery insertion but adds infrastructure complexity. Storage Write API provides higher throughput streaming but requires custom integration logic. For streaming workloads requiring guaranteed sub-second latency and unlimited concurrency, e6data processes streaming data with the same performance characteristics as batch operations.

BigQuery supports querying multiple tables using wildcard expressions, but performance suffers when wildcards are too broad or when using legacy date-sharded table patterns. Modern partitioning strategies dramatically outperform date-sharded tables.

Here's how to optimize multi-table querying:

1-- AVOID: Broad wildcard that scans many unnecessary tables

2SELECT *

3FROM `project.dataset.sales_*`

4WHERE _TABLE_SUFFIX BETWEEN '20240101' AND '20240131';

5

6-- Better: Use granular prefixes for wildcards

7SELECT

8 order_id,

9 revenue,

10 order_date

11FROM `project.dataset.sales_202401*`

12WHERE _TABLE_SUFFIX BETWEEN '01' AND '31';

13

14-- Best: Use partitioned tables instead of date-sharded tables

15SELECT

16 order_id,

17 revenue,

18 order_date

19FROM `project.dataset.sales_partitioned`

20WHERE DATE(order_timestamp) BETWEEN '2024-01-01' AND '2024-01-31';Alternatives: Clustered tables combined with partitioning offer optimal performance for multi-dimensional filtering. Views can abstract complex table union logic.

Join performance in BigQuery is significantly impacted by the data types used in join conditions. BigQuery doesn't index primary keys like traditional databases, so wider join columns take longer to compare. Using INT64 data types in joins is cheaper and more efficient than STRING data types.

Here's how data type choice impacts join performance:

1-- Less efficient: STRING joins require more comparison time

2SELECT

3 o.order_id,

4 c.customer_name,

5 o.revenue

6FROM `project.dataset.orders` o

7JOIN `project.dataset.customers` c

8 ON o.customer_uuid = c.customer_uuid; -- STRING comparison

9

10-- More efficient: INT64 joins perform faster comparisons

11SELECT

12 o.order_id,

13 c.customer_name,

14 o.revenue

15FROM `project.dataset.orders` o

16JOIN `project.dataset.customers` c

17 ON o.customer_id = c.customer_id; -- INT64 comparison

18

19-- When STRING joins are necessary, consider hashing

20SELECT

21 o.order_id,

22 c.customer_name,

23 o.revenue

24FROM `project.dataset.orders` o

25JOIN `project.dataset.customers` c

26 ON FARM_FINGERPRINT(o.customer_email) = FARM_FINGERPRINT(c.customer_email);The performance difference becomes more pronounced as join volumes increase. Consider using surrogate INT64 keys for frequently joined dimension tables.

Alternatives: Nested and repeated fields can eliminate joins entirely for one-to-many relationships. Clustering on join keys can improve join performance regardless of data type.

BigQuery automatically caches query results for 24 hours, providing significant cost and performance benefits when identical queries are executed repeatedly. Understanding how to leverage caching effectively can dramatically reduce development and debugging costs.

For predictable workloads and cost management, BigQuery Reservations provide guaranteed capacity and improved performance isolation. Understanding slot management is crucial for enterprise-scale operations.

Here's how to monitor and optimize slot usage:

1-- Monitor slot usage patterns

2SELECT

3 job_id,

4 user_email,

5 project_id,

6 creation_time,

7 total_slot_ms,

8 total_bytes_processed,

9 total_bytes_billed,

10 ROUND(total_slot_ms / 1000, 2) as total_slot_seconds,

11 statement_type

12FROM `region-us.INFORMATION_SCHEMA.JOBS_BY_PROJECT`

13WHERE creation_time >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 DAY)

14 AND state = 'DONE'

15 AND total_slot_ms > 0

16ORDER BY total_slot_ms DESC

17LIMIT 20;

18

19-- Analyze query performance trends

20SELECT

21 DATE(creation_time) as query_date,

22 statement_type,

23 COUNT(*) as query_count,

24 AVG(total_slot_ms / 1000) as avg_slot_seconds,

25 AVG(total_bytes_processed / POW(10, 12)) as avg_tb_processed,

26 MAX(total_slot_ms / 1000) as max_slot_seconds

27FROM `region-us.INFORMATION_SCHEMA.JOBS_BY_PROJECT`

28WHERE creation_time >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 7 DAY)

29 AND state = 'DONE'

30 AND total_slot_ms > 0

31GROUP BY 1, 2

32ORDER BY 1 DESC, 4 DESC;Key slot management strategies:

Force queries to include partition filters to prevent expensive full table scans:

1-- Require partition filter on large tables

2ALTER TABLE `project.dataset.sales_partitioned`

3SET OPTIONS (

4 require_partition_filter = true,

5 partition_expiration_days = 365

6);

7

8-- Queries must now include partition filter

9SELECT customer_id, revenue, order_date

10FROM `project.dataset.sales_partitioned`

11WHERE DATE(order_timestamp) = '2024-09-15' -- Required!

12 AND region = 'US-WEST';Alternatives: Custom cost controls can limit query costs at project or user level. Query validator provides cost estimates before execution.

Even after implementing clustered tables for dashboard performance, materialized views for aggregation optimization, strategic denormalization, and intelligent query pruning, some BI/SQL workloads still face performance bottlenecks. That's where e6data comes in.

e6data is a decentralized, Kubernetes-native lakehouse compute engine delivering 10x faster query performance with 60% lower compute costs through per-vCPU billing and zero data movement. It runs directly on existing data formats (Delta/Iceberg/Hudi, Parquet, CSV, JSON), requiring no migration or rewrites. Teams often keep their existing BigQuery platform for development workflows while offloading performance-critical queries to e6data for sub-second latency and 1000+ QPS concurrency.

Key benefits of the e6data approach:

Start a free trial of e6data and see performance comparison on your own workloads. Use our cost calculator to estimate potential gains.