Databricks is a one-stop shop for SQL analytics, data engineering, and machine learning. But with great power comes… a great cloud bill. If you’ve ever left a cluster running over a long weekend or spun up a 64-core monster “just to be safe,” you know how quickly costs can spiral out of control. We’ve seen teams double their Databricks spend in a single quarter by running clusters 24/7 with low utilization. The good news is that reining in those costs doesn’t mean slowing down your projects. In fact, it’s straightforward: match your compute resources to actual workload demand, fine-tune your tables and jobs for efficiency, and set up guardrails so nothing runs amok on your dime.

The upside: Databricks gives you plenty of levers and telemetry to optimize costs – from cluster auto-scaling and spot instances to Delta Lake optimizations and detailed usage logs. Each tactic we cover below is mapped to measurable savings. This guide is organized by workload pattern (BI dashboards, ad-hoc analytics, and ETL/streaming pipelines) so you can jump to what matters most. We’ll show code snippets and configs you can apply today to start saving.

Let’s dive in!

What counts as a “red flag” in your Databricks bill? Here are some yardsticks to identify inefficient usage before finance comes knocking:\

If several of these flags apply, it’s time to take action. Pro tip: Set up monitoring and alerts on these metrics. Databricks provides system tables and usage reports to audit per-cluster utilization, and you can even configure budget alerts to get notified when spend approaches a limit. Also consider tagging your Databricks resources by project or team to track who’s using what – many organizations require cost-center tags on clusters for chargeback reporting. This governance makes it easier to identify which workloads are driving costs and prevent “mystery” expenses.

Before jumping into fixes, figure out which type of workload is eating most of your Databricks budget. The optimization tactics will differ for each pattern:

How to use this guide: We’ll tackle each of the above scenarios in turn. For each, we outline why the optimization matters, how to implement it (with code or config examples), any alternatives or trade-offs to consider, and the expected savings. Our focus is on practical, hands-on tips that a senior data engineer can apply right away. Let’s start with BI dashboards.

Typical cost pitfalls: Keeping large warehouses running during off-hours, and queries that don’t leverage result caching or pre-aggregated data.

Don’t use a fixed-size warehouse for dashboards that have variable or infrequent load. Instead, enable aggressive auto-scaling and auto-stopping so you pay only for the compute you need. Databricks SQL Warehouses support elastic scaling out/in based on load, and can auto-stop when idle.

For example, here’s a JSON config for a warehouse that starts at 1 cluster and can scale up to 8 clusters, with a 10-minute auto-stop (meaning if no queries run for 10 min, it shuts down):

1{

2 "name": "bi_warehouse_smart_scaling",

3 "cluster_size": "Small",

4 "min_num_clusters": 1,

5 "max_num_clusters": 8,

6 "auto_stop_mins": 10,

7 "scaling_policy": {

8 "target_utilization": 70,

9 "scale_out_threshold": 80,

10 "scale_in_threshold": 30,

11 "scale_out_cooldown": "2m",

12 "scale_in_cooldown": "5m"

13 }

14}In the Databricks SQL UI, this corresponds to setting a small starting warehouse with auto-scaling up to a larger size, and short auto-stop timeout.

Trade-off: You might incur a 2–3 minute lag when scaling up if a burst of queries comes in (cold-start latency), but the savings during idle hours are enormous. Most dashboards can tolerate a bit of spin-up time for the first query at 8:00am. The ~75% savings comes from not running an 8-node warehouse all night when nobody’s querying.

Pro tip: If your concurrency rarely exceeds 1–2 queries at a time, even during peak, consider keeping the max clusters low. On the flip side, if you have unpredictable spikes, set max_num_clusters high but with target_utilization around 70% so it adds capacity only when needed. Monitor the cluster’s CPU utilization over time to fine-tune these settings.

A huge benefit of Databricks SQL is the result cache: if the same query text is run again, the results can be served from cache instantly (no recompute) as long as the underlying data hasn’t changed. But to reap this benefit, your queries must be written in a cache-friendly way. In practice, that means using stable time boundaries and deterministic SQL rather than non-deterministic filters.

Example:

1-- Cache-unfriendly: Dynamic timestamps

2SELECT DATE(order_time) as day, SUM(amount) as revenue

3FROM orders

4WHERE order_time >= NOW() - INTERVAL 30 DAYS;

5

6-- Cache-friendly: Stable boundaries

7SELECT DATE(order_time) as day, SUM(amount) as revenue

8FROM orders

9WHERE DATE(order_time) >= CURRENT_DATE() - INTERVAL 30 DAYS

10 AND DATE(order_time) < CURRENT_DATE();Enable warehouse-level caching and Photon acceleration:

1ALTER WAREHOUSE bi_warehouse_smart_scaling

2SET ENABLE_RESULT_CACHE = true,

3 RESULT_CACHE_TTL = 3600,

4 ENABLE_PHOTON = true;If your dashboards are querying Delta Lake tables, how those tables are organized on storage can make a huge difference in query cost. Two big tools here are Z-Ordering and Delta’s new liquid clustering. The idea is to cluster the data on disk by the fields that your queries filter by most often, so that those queries read less data (skip data that doesn’t match the filter thanks to file statistics).

Step 1: Identify query patterns. Use the query history to find which columns are frequently used in filters or groupings. For example, let’s say many dashboard queries hit a shipments table and filter by ship_date or region:

1-- Find most frequent queries on the 'shipments' table in our BI warehouse

2SELECT query_text,

3COUNT(*) AS freq

4FROM system.query.history

5 WHERE warehouse_name = 'bi_warehouse_smart_scaling'

6 AND query_text LIKE '%shipments%'

7 GROUP BY query_text

8 ORDER BY freq DESC;(You could also parse the query plans via EXPLAIN or use the Databricks query history UI.)

Step 2: Apply layout optimizations. Suppose we see that ship_date, region, and status are common filter columns. We can reorganize the Delta table so these columns are indexed/clustering keys:

1-- Cluster the 'shipments' Delta table by ship_date and region for faster filtering

2OPTIMIZE shipments

3ZORDER BY (ship_date, region, status);

4

5ALTER TABLE shipments

6SET TBLPROPERTIES (

7 'delta.feature.liquid' = 'supported',

8 'delta.clustering.columns' = 'ship_date,region',

9 'delta.autoOptimize.optimizeWrite' = 'true',

10 'delta.autoOptimize.autoCompact' = 'true'

11);The OPTIMIZE ... ZORDER will rewrite the table’s small files into larger files clustered on ship_date, region, status. Enabling the Delta table properties for liquid clustering means Databricks will continuously optimize the data clustering in the background (available in newer DBR versions), and autoOptimize settings ensure that as new data comes in, it’s automatically compacted and optimized without manual intervention.

Alternatives:

If your dashboard or reporting workload is infrequent (e.g., a weekly executive report) or has very bursty traffic, consider using Databricks Serverless SQL Warehouses. Serverless warehouses spin up and down in a few seconds and you only pay when queries are running. This can cut costs dramatically for intermittent workloads, since you don’t have an always-on cluster at all.

For example, you might configure a small serverless warehouse that auto-stops after 2 minutes of inactivity:

1{

2 "name": "executive_reporting_serverless",

3 "warehouse_type": "PRO",

4 "serverless": true,

5 "auto_stop_mins": 2,

6 "max_num_clusters": 4

7}In practice, this means if a VP opens a dashboard at 9am on Monday, the warehouse will spin up on the fly (a cold start of ~30 seconds) then run the queries, and shut off by 9:03am once idle. The cost for the rest of the week when no one is querying is $0. Compare that to a classic cluster that might be left running “just in case”.

Trade-off: Serverless has a cold start and can be slightly more per-second cost than a normal warehouse. There’s about a 30–60 second latency when a serverless SQL endpoint starts from cold. But if your usage is infrequent, the savings by not running idle far outweigh this. Also, serverless warehouses currently have certain region and size limitations (check Databricks docs), but they can scale out to handle concurrency if needed (max_num_clusters above).

Not all dashboards need to refresh on a fixed schedule. If the data a dashboard uses hasn’t changed, why run the queries again? A smarter approach is event-driven refresh: only update the dashboard when new data arrives or when an ETL pipeline completes.

You can implement this by hooking into Delta Lake’s transaction log or using job hooks. For example, consider a dashboard that depends on a Delta table sales. We can track the last update timestamp of that table and only refresh the dashboard if there’s a newer update:

1# Pseudo-code for conditional dashboard refresh

2import requests

3from delta.tables import DeltaTable

4

5def trigger_conditional_refresh(warehouse_id, dashboard_id, source_table):

6 dt = DeltaTable.forName(spark, source_table)

7 last_update_time = dt.history(1).select("timestamp").collect()[0]["timestamp"]

8 last_refresh_time = get_last_refresh_time(dashboard_id) # Your logic to track last refresh

9

10 if last_update_time > last_refresh_time:

11 # Start the SQL warehouse if not running

12 requests.post(

13 f"https://{workspace_url}/api/2.0/sql/warehouses/{warehouse_id}/start",

14 headers={"Authorization": f"Bearer {access_token}"}

15 )

16 refresh_dashboard(dashboard_id) # Call DBSQL API or internal API to refresh the dashboard

17 update_refresh_metadata(dashboard_id, last_update_time)Architecture tip: Integrate this with your ETL. For instance, when your ETL job finishes writing to sales Delta table, it can call a function or send a message (via webhook or Pub/Sub) that triggers the dashboard refresh logic. Essentially: ETL complete → Delta updated → trigger refresh. This event-driven approach can eliminate almost all redundant refresh queries. If your current schedule refreshes every 15 minutes “just in case” but data only changes a few times a day, you’re looking at >90% reduction in query load by switching to event-driven updates.

Typical cost pitfalls: Long-lived interactive clusters that nobody shut down, and “oops” queries that scan a 10 TB table when you only needed a sample.

When doing ad-hoc work, waiting 5+ minutes for a cluster to spin up is painful, so teams sometimes keep an interactive cluster running all day to avoid startup lag. That convenience costs money as the cluster idles. Cluster pools solve this by keeping a small set of ready-to-go instances on standby, dramatically cutting startup time without running full clusters continuously.

A cluster pool is a managed pool of VMs that clusters can draw from. You might configure a pool like this:

1{

2 "cluster_pool_name": "analytics_pool",

3 "min_idle_instances": 2,

4 "max_capacity": 10,

5 "node_type_id": "i3.xlarge",

6 "preloaded_spark_versions": ["11.3.x-scala2.12"],

7 "idle_instance_autotermination_minutes": 30

8}This pool keeps 2 instances warm and can scale to 10. Your interactive clusters (for notebooks) are then created using the pool. When a user requests a cluster, if idle instances are available, it attaches in seconds (no cloud VM boot needed). If the pool is empty, it will spin up a new instance (a bit slower, but you can adjust min_idle_instances to balance readiness vs. cost).

Trade-off: You pay a small cost to keep those 2 idle instances (“pool maintenance cost”), but it’s much less than keeping an entire interactive cluster of 8 nodes running. And importantly, when nobody is using Databricks, the pool will auto-terminate those instances after 30 minutes idle above. Overall, teams have seen ~40% cost reduction by using pools because they remove the need for always-on clusters while still giving nearly instant start times.

To set this up, go to the Compute section, create a Pool with the desired settings, then update your cluster configs (or cluster policies) to use the pool. Databricks will handle the rest.

How many times have we or a teammate run a few queries and then gone to lunch, leaving the cluster running for hours? Auto-termination is your safety net. It shuts down a cluster after a period of inactivity. By default, interactive clusters might have a 120 minute auto-terminate – you should crank this way down (e.g., 15 minutes) for ad-hoc clusters.

Better yet, use a Cluster Policy to enforce it across the board so even if someone forgets, the policy has your back. For example:

1{

2 "cluster_policy_name": "analytics_cost_control",

3 "definition": {

4 "autotermination_minutes": { "type": "fixed", "value": 15 },

5 "spark.databricks.cluster.profile": { "type": "allowlist", "values": ["singleNode", "serverless"] },

6 "instance_pool_id": { "type": "fixed", "value": "<ID of analytics_pool>" }

7 }

8}This policy (which an admin can set in the Databricks UI) does a few things: it fixes auto-terminate at 15 minutes idle, restricts cluster types to single node or serverless (as examples of cheaper options), and forces clusters to use the instance pool we created earlier. In essence, any ad-hoc cluster launched under this policy will auto-shutdown quickly and use cost-efficient resources.

Speaking of forgotten workloads, also keep an eye out for “zombie jobs” – scheduled jobs or streaming queries that were left running unintentionally. It’s good practice to set timeouts or alerts on job runtimes. Databricks even has a Query Watchdog for interactive clusters, which by default will cancel queries that are producing insane output.

When spinning up a notebook cluster, many folks just select a generic Large cluster (because “why not, I might need it”). In reality, the optimal cluster size depends on what you’re doing – and picking a smaller cluster for lighter tasks can save a ton. As a senior data engineer, you can provide guidelines or even automated recommendations for your team.

For example, here’s a simple Python function that suggests a cluster size based on operation type and data size:

1def recommend_cluster_size(operation_type, data_size_gb):

2 templates = {

3 "profiling": {"cores": 8, "memory": "32g"},

4 "feature_engineering": {"cores": 16, "memory": "64g"},

5 "model_training": {"cores": 32, "memory": "128g"},

6 "large_join": {"cores": 64, "memory": "256g"}

7 }

8 config = templates.get(operation_type, templates["feature_engineering"])

9 if data_size_gb > 100:

10 # Scale up if data is very large

11 config = {

12 "cores": config["cores"] * 2,

13 "memory": f"{int(config['memory'][:-1]) * 2}g"

14 }

15 return configFinally, consider using auto-scaling even on interactive clusters when possible. If a user cluster might sometimes need 8 nodes but usually only 2, give it a range (2–8) so it scales up on demand. This combines well with auto-termination to keep costs tight.

For analysis jobs or notebooks that can handle the occasional interruption, use cloud spot instances (aka AWS spot, Azure low-priority, GCP preemptible VMs) for the worker nodes. Spot instances are heavily discounted (often 60–90% off) because the cloud can take them back with short notice.

Here’s an example cluster config enabling spot:

1{

2 "cluster_name": "analytics_spot_optimized",

3 "node_type_id": "i3.xlarge",

4 "num_workers": 4,

5 "autoscale": { "min_workers": 2, "max_workers": 8 },

6 "aws_attributes": {

7 "first_on_demand": 1,

8 "availability": "SPOT_WITH_FALLBACK",

9 "spot_bid_price_percent": 100

10 }

11}In this configuration:

availability: SPOT_WITH_FALLBACK means try to get spot instances for workers, but if none available, use on-demand so the cluster still functions.first_on_demand: 1 keeps at least one on-demand node (often the driver) to reduce risk of the whole cluster vanishing.spot_bid_price_percent: 100 means we’re willing to pay up to 100% of on-demand price for spot (you can adjust if you want to cap lower).For ad-hoc workloads, this can drastically cut costs, especially if you’re running large clusters for short periods. The main consideration is fault tolerance: if a spot VM is reclaimed, Spark will retry those tasks on another node, which might slow the job slightly. It’s usually fine for batch jobs or notebook queries – just avoid spot for stateful long-running processes that can’t handle restarts.

When exploring a new dataset, it’s tempting to run SELECT * or compute aggregates on the entire table. But if the table is huge (say, a billion rows), that exploration could cost as much as a full production job. The smarter approach is progressive sampling: start with a small sample, iterate, and only scan the whole dataset when you’re confident you need to.

Databricks (via Spark SQL) supports SQL sampling and limiting. For example:

1-- Create a 1% sample table for quick exploration

2CREATE OR REPLACE TABLE customer_events_sample_1pct AS

3SELECT *

4FROM customer_events

5TABLESAMPLE (1 PERCENT) REPEATABLE(42);

6

7-- Create a 10% sample for more in-depth testing

8CREATE OR REPLACE TABLE customer_events_sample_10pct AS

9SELECT *

10FROM customer_events

11TABLESAMPLE (10 PERCENT) REPEATABLE(42);Here we took customer_events and made two smaller tables: one with 1% of data, one with 10%. We used REPEATABLE(42) so the sample is deterministic (same 1% each time, for consistency). Now an analyst can first run queries on customer_events_sample_1pc. If results look promising or need more data, move to 10%. Only if absolutely needed, run on the full customer_events. Often this process finds issues or answers questions without ever touching the full set until the final step.

Typical cost pitfalls: One-size-fits-all clusters for pipelines that have distinct stages, doing full table refreshes when only 5% of data changed, excessive shuffles or unpartitioned writes causing extra work, and streaming jobs running at peak provisioning even when input rates are low.

In a typical ETL job, you might have stages like ingestion (reading raw data), transformation (joining, enriching), and aggregation (calculating summaries). These stages have different resource needs: ingestion might be I/O bound, transformation CPU/memory bound, etc. If you run them all on one fixed cluster, you either over-provision to handle the hardest stage (wasting resources on the lighter stages) or under-provision and slow down the heavy stage.

The solution: Use job clusters tuned per stage. In Databricks Jobs, you can define multiple tasks with their own clusters (or in workflows, chain jobs). Give each stage a cluster spec that fits its load.

You would use this by configuring your job with multiple tasks, each task using a new job cluster defined by such a function. After one stage finishes and its cluster auto-terminates, the next stage starts on its own cluster.

Also, by using spot instances in these batch jobs (as shown), you save further on the cost per node. Just ensure you have retry logic or fault tolerance for spot interruptions (Spark will usually retry failed tasks automatically).

Sometimes the easiest way to reduce costs is simply to make the job do less work. That comes down to Spark-level optimizations. As a senior DE, you likely know these, but it’s worth emphasizing:

Enable Adaptive Query Execution (AQE): This is a must for Spark 3+ on Databricks. It lets Spark optimize joins and partitions on the fly. Make sure it’s on:

1spark.conf.set("spark.sql.adaptive.enabled", "true")

2spark.conf.set("spark.sql.adaptive.coalescePartitions.enabled", "true")

3spark.conf.set("spark.sql.adaptive.skewJoin.enabled", "true")

4spark.conf.set("spark.sql.adaptive.localShuffleReader.enabled", "true")Databricks runtime usually has AQE on by default now, but double-check the skew join handling especially – it can automatically handle data skew by splitting tasks, which prevents one task from dragging out the job.

Optimize Delta writes: If your ETL writes to Delta Lake, use optimizeWrite and autoCompact to avoid a ton of small files:

1spark.conf.set("spark.databricks.delta.optimizeWrite.enabled", "true")

2spark.conf.set("spark.databricks.delta.autoCompact.enabled", "true")These ensure that as you write out data, Databricks will coalesce small files and target an optimal file size (~128MB), which makes reads faster later and reduces metadata overhead.

Cache smartly: If your job reads the same DataFrame multiple times (say you do a costly lookup or join repeatedly), consider caching it in memory (df.cache()) for the duration of the job. But be mindful to unpersist or not cache huge data that doesn’t fit, as that can backfire. Use caching for moderately sized, frequently reused datasets within a single job run.

Repartition before writing large outputs: If you’re writing a large table, repartition by a key or to a certain number of files to balance file sizes. For example, if you have a skewed key, partitioning by that key can concentrate data nicely. Aim for output files ~100MB+ in size to avoid too many tiny files.

After writes, it’s often beneficial to run an explicit OPTIMIZE (for Delta) or compaction step, if your pipeline can afford it, to pack the files. This can be done asynchronously as well.

Monitoring tip: Keep an eye on the Spark UI or execution metrics for your jobs. If you see a lot of spilled data to disk or a long tail in tasks due to skew, that’s a sign to tweak partitions or add a salt key to even out data. Reducing shuffle partitions (spark.sql.shuffle.partitions) from the default 200 (if your data isn’t huge) can also cut overhead.

Not every ETL needs to rebuild the entire table every run. If your source data or business logic only changes a portion of the data each day, you can use incremental processing to only handle new or changed records. Databricks Delta has a feature called Change Data Feed (CDF) that makes this easier by tracking row-level inserts, updates, deletes.

By enabling CDF on a Delta table, you can query the changes that happened since a certain version or timestamp. Here’s how you turn it on:

1ALTER TABLE transactions

2SET TBLPROPERTIES (delta.enableChangeDataFeed = true);Now, suppose you have a pipeline that updates a target table based on a source Delta table (transactions). Instead of reading the whole source, you can just read the CDF:

1from delta.tables import DeltaTable

2

3def incremental_etl_pipeline(source_table, target_table):

4 # Get last processed version from a checkpoint tracking table

5 last_proc_version = spark.sql(f"SELECT last_processed_version FROM etl_checkpoint WHERE table_name = '{source_table}'").collect()

6 start_version = (last_proc_version[0]['last_processed_version'] + 1) if last_proc_version else 0

7

8 # Read changes since last processed version

9 changes_df = (spark.read.format("delta")

10 .option("readChangeData", "true")

11 .option("startingVersion", start_version)

12 .table(source_table))

13

14 # Filter to only inserts/updates (ignore deletes or handle them accordingly)

15 from pyspark.sql.functions import col

16 new_or_updated = changes_df.filter(col("_change_type").isin(["insert", "update"]))

17

18 # Merge into target table (upsert logic)

19 DeltaTable.forName(spark, target_table).alias("t") \

20 .merge(new_or_updated.alias("c"), "t.id = c.id") \

21 .whenMatchedUpdateAll() \

22 .whenNotMatchedInsertAll() \

23 .execute()

24

25 # Update checkpoint

26 latest_version = spark.sql(f"DESCRIBE HISTORY {source_table} LIMIT 1").collect()[0]["version"]

27 spark.sql(f"MERGE INTO etl_checkpoint USING (SELECT '{source_table}' AS table_name, {latest_version} AS last_processed_version) src ON etl_checkpoint.table_name = src.table_name WHEN MATCHED THEN UPDATE SET last_processed_version = src.last_processed_version WHEN NOT MATCHED THEN INSERT *")The above is a bit involved, but the gist is:

readChangeData with a starting version).The payoff is massive: if only 5% of rows changed since last run, you’re now processing 5% of the data instead of 100%. That’s an instant ~95% savings in compute, more or less. Even if 20% changed, that’s 80% less to process.

Monitoring: Keep an eye on how much data CDF is pulling. You can inspect the # of output rows in the Spark plan or just count the changes_df. If you notice the “change data” is approaching the size of full data frequently, it might mean you need to do a full recompute occasionally (or that your incremental logic could be improved). But for many slowly changing tables, CDF is a game-changer for cost.

One caveat: CDF retains change logs, which can add to storage costs if not managed. You might need to vacuum or set retention intervals depending on your data retention policy. But storage is usually cheaper than compute.

This one’s almost a freebie: always run production jobs on Job Compute (i.e., job clusters), not on an all-purpose interactive cluster. Databricks charges different rates for all-purpose vs. job clusters – job clusters are significantly cheaper per DBU. Plus, a job cluster is ephemeral: it spins up for the job and shuts down after, so you don’t pay for idle time between scheduled runs.

Here’s how you might define a job with an embedded cluster (using the Jobs API or UI) – for example, via JSON:

1{

2 "job_clusters": [

3 {

4 "job_cluster_key": "etl_ingestion_cluster",

5 "new_cluster": {

6 "node_type_id": "i3.xlarge",

7 "num_workers": 4,

8 "spark_version": "11.3.x-scala2.12",

9 "aws_attributes": {

10 "availability": "SPOT_WITH_FALLBACK",

11 "spot_bid_price_percent": 100,

12 "first_on_demand": 1

13 },

14 "autotermination_minutes": 0,

15 "spark_conf": {

16 "spark.sql.adaptive.enabled": "true"

17 }

18 }

19 }

20 ],

21 "tasks": [ ... ] // define tasks that use "etl_ingestion_cluster"

22}Scheduling tip: You can create multi-task jobs where different tasks use different job_clusters configurations. This ties into the stage-specific sizing from tactic #1 – in one job definition you could have an “ingest task” using a smaller cluster and a “transform task” using a larger cluster, etc., all orchestrated together. The platform will handle spinning each up when needed. This way, each part of your pipeline only runs on the resources it truly needs.

Streaming Structured Streaming jobs can be cost hogs if not configured wisely, because they run 24/7. Two big opportunities for savings: auto-scaling the stream processing and tuning triggers/batch intervals to your actual data rate and SLA.

Auto-Scale Streaming: Spark Structured Streaming can dynamically adjust the number of executors based on the incoming data rate (micro-batch queue backlog). Make sure you’ve enabled spark streaming dynamic allocation.

Adaptive Batch Interval: If your streaming job doesn’t need sub-second latency, use a slightly longer trigger interval during low load. For instance, if the stream can process 1 minute of data in 10 seconds, you might set a trigger interval of 1 minute. That way, it batches data and processes a minute at a time, letting the cluster possibly go idle (and even auto-scale down) in between if it catches up. On the other hand, if a burst comes in, the backlog will cause Spark to add executors (up to the max) to catch up.

Backpressure and State Management: Spark will automatically adjust the batch size to handle bursts (that’s backpressure). You should also use watermarks to limit stateful aggregation windows, so that old state can be dropped and memory usage stays in check. And periodically checkpoint the stream to durable storage (which is required anyway for recovery) – storing checkpoints compressed and cleaning them up if possible can save some storage and overhead.

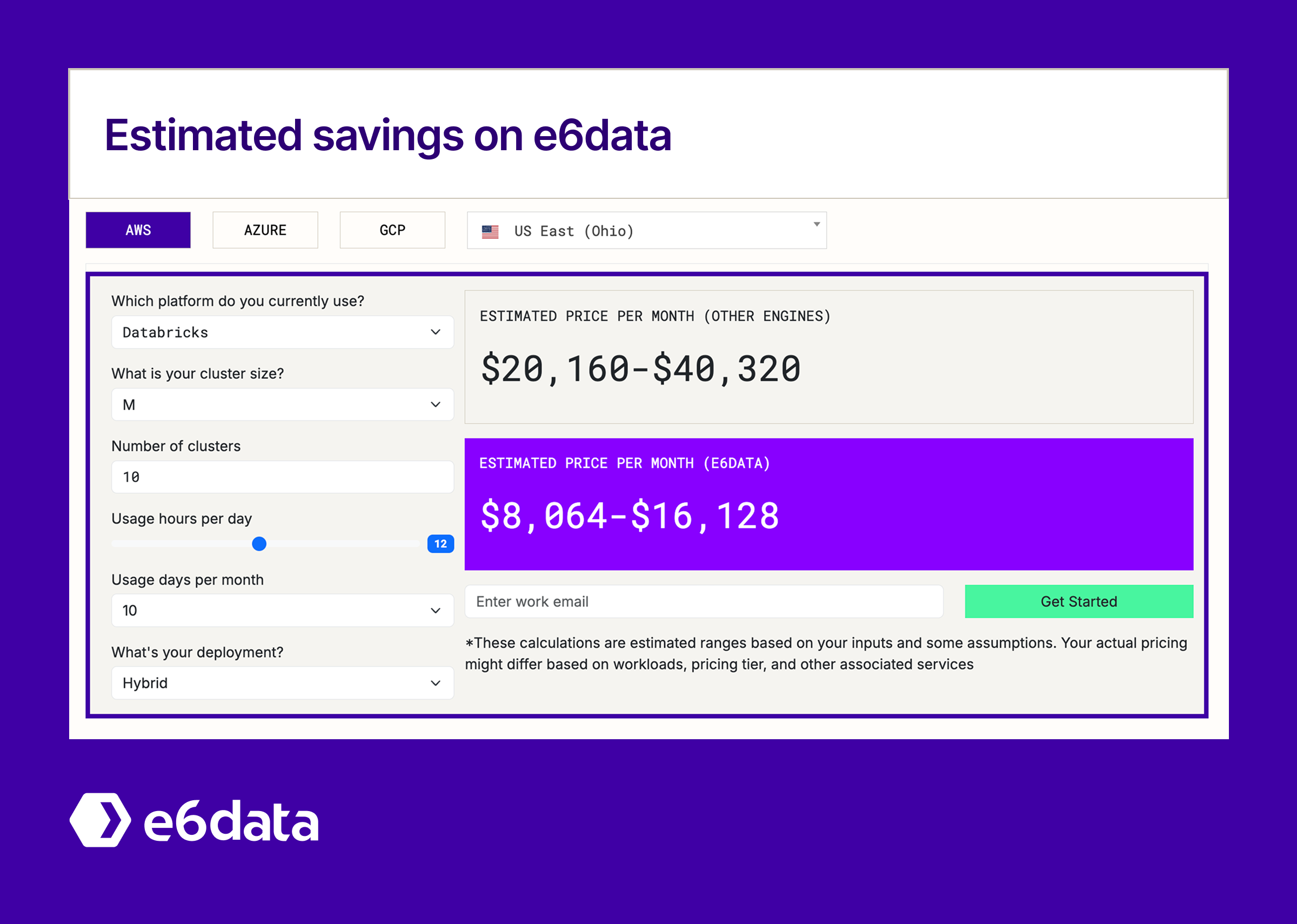

e6data offers a lakehouse query engine with per-vCPU pricing – it scales compute atomically to the demand of each query, with no need to manage clusters at all. It can query the same data (Delta tables, Parquet, etc.) without migration. Many teams use Databricks for collaborative development and heavy ML, but offload cost-sensitive BI analytics to e6data to capture additional savings.

Key benefits of the e6data approach:

➡️ Interested in exploring this? Start a free trial of e6data and see how it compares on your own workloads.